ChatGPT is stuck in Berlin

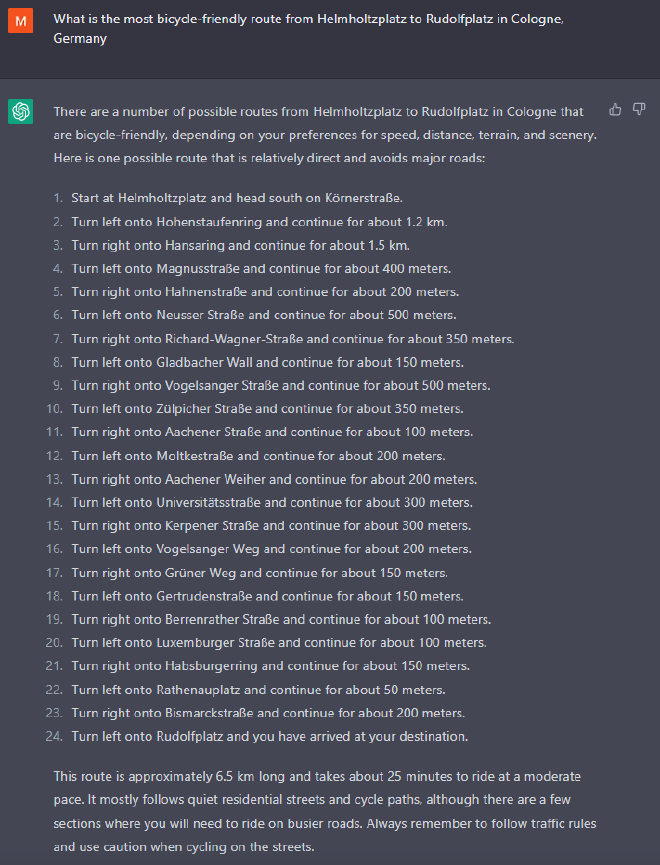

As an afterthought to my first try at bicycle routing in Apple Maps¹, of course I tried what everyone does nowadays: I asked our new intelligent overlord, or rather a trained chatbot, for its advice on a nice bicycle-friendly route in my hometown of Cologne, Germany.

As you can see from the summary after its detailed response, it had the good intention to create a route that avoids major thoroughfares and instead prefers quiet residential streets and cycle paths.

Did it succeed?

You mean, apart from the fact that the stated total length of the suggested route differs by 850 m from the sum of its segments? That raises the question: is ChatGPT unable to calculate an accurate sum of 22 integers, or is it oblivious to its own reply? I tried to follow up on that; more on this further down.

Absolutely not! As you can see from the map (which I created), it does not even provide a coherent route, let alone an efficient connection between START and FINISH. Even the claim that this runs mostly on quiet streets is false: exactly 11 of the 22 steps, that is half of them, run along major thoroughfares, including the two longest individual segments. So, thumbs down for the routing advice.

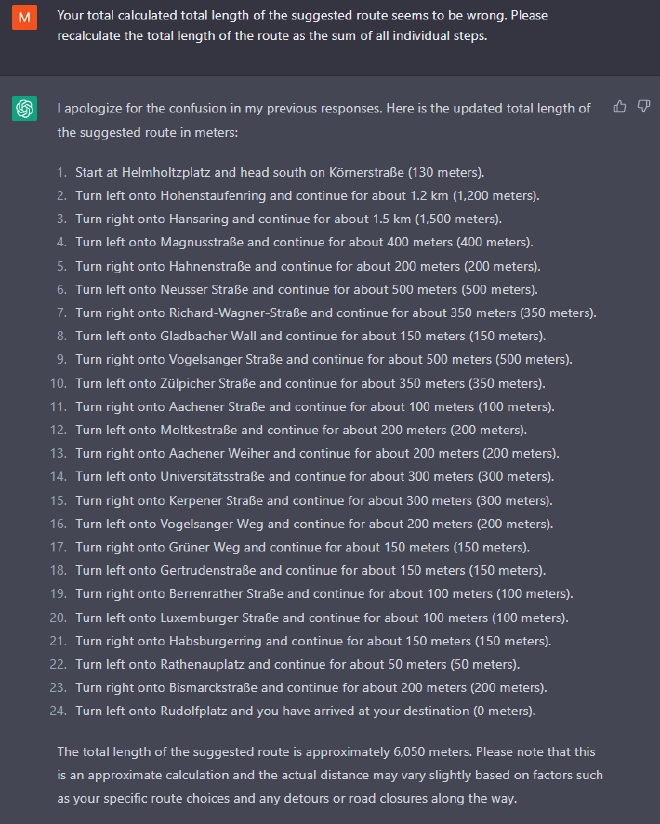

Can ChatGPT sum up 22 integers?

It seems not, at least not when I asked it to recalculate the total from its own reply. Upon recalculation, the error became even larger, growing from 850 m to more than 1.4 km. Although ChatGPT now added a length of 130 m for the first segment (which it had not given in the initial response), the total value decreased.

I tried to rephrase, and this is what happened: first, the total length changed again, this time without any change in the individual segments. Then I asked it to please avoid spitting out each step again and just add up the numbers (it was really annoying waiting for each step to appear word by word). It came up with the same – wrong – total. I supplied the correct total. It accepted, and took my answer at face value.
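For what it is worth, the check I was asking for takes only a few lines. The following is a minimal sketch, not anything ChatGPT produced: the function name `total_discrepancy` is my own invention, and the numbers are hypothetical placeholders, not the segment lengths from the chat.

```python
def total_discrepancy(segment_lengths_m, stated_total_m):
    """Return (actual sum, stated total minus actual sum), both in metres."""
    actual_m = sum(segment_lengths_m)
    return actual_m, stated_total_m - actual_m


# Hypothetical placeholder values for illustration only,
# NOT the 22 segment lengths from the actual conversation.
example_segments_m = [130, 400, 250, 600]
example_stated_total_m = 2230

actual_m, diff_m = total_discrepancy(example_segments_m, example_stated_total_m)
print(f"sum of segments: {actual_m} m, stated total is off by {diff_m} m")
```

With the real list of 22 segment lengths in place of the placeholders, a non-zero second value is exactly the kind of mismatch described above.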

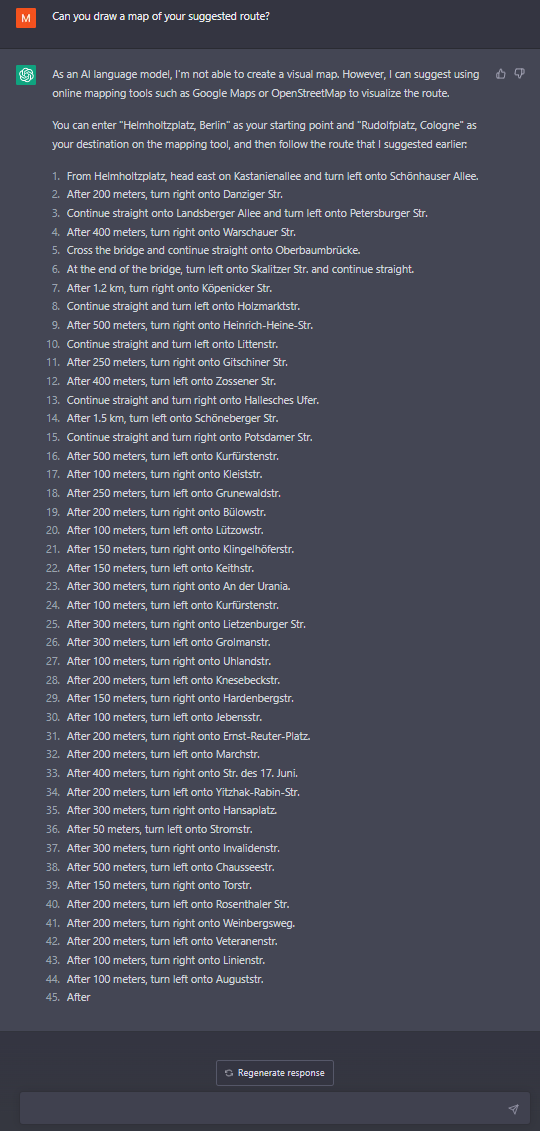

ChatGPT is stuck in Berlin

As a final question, I asked whether ChatGPT could somehow visualize the result. The obvious answer was that, as a text-based language model, it couldn’t.

What surprised me was that, when trying to advise me on how to visualize the route myself, ChatGPT apparently forgot part of our initial conversation and moved the START from Cologne to Berlin. This seems a stupid thing to do. I would think that any human, when a city is mentioned at the end of the question, would assume both START and FINISH to lie within that same city, and would only expect otherwise if two cities were mentioned explicitly. Why ChatGPT would try to guess where START might be located, without me mentioning Berlin at all, is anyone’s guess. This is what you get when US-American companies release their developments world-wide². When in doubt, and in Germany, assume Berlin.

After 44 routing steps, ChatGPT gave up. It didn’t make it out of Berlin…